I want to be able to write code like this:

IRepository<User> repository = new Repository<User>();

repository.GetById(userId).Username = "Stuart";

repository.SubmitChanges();

and like this:

return new Repository<User>()

.Where(u => u.Username == username)

.Select(u => Map(u));

I want the Repository to work with an underlying DataContext that follows a Unit of Work pattern. The UoW has to be aware of the context in which it is running (e.g. HTTP Web request or thread local). That way change tracking has a lifetime that matches my Request/Thread and my DataContext gets disposed for me when we’re done.

I also want the Repository to be auto-magically set up for me, with either an underlying SQL database or an in-memory data store (for my tests). Oh, and the in-memory store must track changes too! It’ll be no good for tests if it doesn’t work the same way.

Finally (and most importantly) the Repository has to support IQueryable so that we can work all that LINQ magic. I’ll also want to derive specialized Repositories from Repository<T> to encapsulate a bunch of canned queries – including some fluent DSL-like methods that work as filters.

I guess I don’t want too much!

We’re going to need the Repository pattern, the Unit of Work pattern, the Dependency Injection pattern and the Inversion of Control pattern. (Thanks Martin.)

This could all be improved substantially so please make suggestions in the comments. It makes a long first post – but ¨Hey!¨ It’ll be worthwhile, hopefully. I’ll also post all the code as a VS project but for now I’ll just list it here:

Let’s start with the interfaces. The Repository uses the Unit Of Work to manage the Data Source.

IDataSource<T> implements IQueryable<T> and adds the DataContext methods InsertOnSubmit() and DeleteOnSubmit() (the ones I use most). I also added a GetById() method for convenience. All the other useful stuff comes from IQueryable<T> …

public interface IDataSource<T> : IQueryable<T> where T : class, new()

{

T GetById(object id);

void InsertOnSubmit(T entity);

void DeleteOnSubmit(T entity);

}

It’s the IUnitOfWork implementation that’s responsible for pushing the changes back down to the store:

public interface IUnitOfWork : IDisposable

{

IDataSource<T> GetDataSource<T>() where T : class, new();

void SubmitChanges();

void SubmitChanges(ConflictMode conflictMode);

}

The DataSource

I was really impressed with Mike Hadlow’s implementation of GetById() – it saves you having to know what the “primary key” property is. So I abstracted a base class for it. The method generates a lambda expression on the fly that is used in a Where() method to find objects where the primary key has the specified value. (An extension method on System.Type finds me the property that is marked with Column.IsPrimaryKey). Here’s the extension method …

public static PropertyInfo GetPrimaryKey(this Type entityType)

{

foreach (PropertyInfo property in entityType.GetProperties())

{

var attributes = (ColumnAttribute[]) property.GetCustomAttributes(

typeof (ColumnAttribute), true);

if (attributes.Length == 1)

{

ColumnAttribute columnAttribute = attributes[0];

if (columnAttribute.IsPrimaryKey)

{

if (property.PropertyType != typeof (int))

{

throw new ApplicationException(

string.Format("Primary key, '{0}', of type '{1}' is not int",

property.Name, entityType));

}

return property;

}

}

}

throw new ApplicationException(

string.Format("No primary key defined for type {0}", entityType.Name));

}

And now here’s the implementation of DataSource<T> – including Mike’s GetById():

using System;

using System.Collections;

using System.Collections.Generic;

using System.Linq;

using System.Linq.Expressions;

using Library.Extensions;

public abstract class DataSource<T> : IDataSource<T> where T : class, new()

{

protected IQueryable<T> _source;

public abstract Expression Expression { get; }

public abstract Type ElementType { get; }

public abstract IQueryProvider Provider { get; }

public virtual T GetById(object id)

{

ParameterExpression itemParameter = Expression.Parameter(typeof (T), "item");

Expression<Func<T, bool>> whereExpression =

Expression.Lambda<Func<T, bool>>(

Expression.Equal(

Expression.Property(

itemParameter,

typeof (T).GetPrimaryKey().Name),

Expression.Constant(id)),

new[] {itemParameter});

return _source.Where(whereExpression).Single();

}

public abstract void InsertOnSubmit(T entity);

public abstract void DeleteOnSubmit(T entity);

IEnumerator IEnumerable.GetEnumerator()

{

return GetEnumerator();

}

public abstract IEnumerator<T> GetEnumerator();

}

So now we need 2 subclasses of DataSource – one for the SQL store and one for the in-memory store. The IoC container will build the Repository with the relevant DataSource and DataContext when the time comes (more later).

Firstly the DatabaseDataSource really just delegates to the relevant Table<T> (which is IQueryable) from the underlying DataContext:

using System;

using System.Collections.Generic;

using System.Data.Linq;

using System.Linq;

using System.Linq.Expressions;

namespace Library.Linq

{

public class DatabaseDataSource<T> : DataSource<T> where T : class, new()

{

private readonly DataContext _dataContext;

public DatabaseDataSource(DataContext dataContext)

{

_dataContext = dataContext;

if (dataContext == null)

throw new ArgumentNullException("dataContext");

_source = _dataContext.GetTable<T>();

}

public override Type ElementType

{

get { return _source.ElementType; }

}

public override Expression Expression

{

get { return _source.Expression; }

}

public override IQueryProvider Provider

{

get { return _source.Provider; }

}

public override IEnumerator<T> GetEnumerator()

{

return _source.GetEnumerator();

}

public override void InsertOnSubmit(T entity)

{

((Table<T>) _source).InsertOnSubmit(entity);

}

public override void DeleteOnSubmit(T entity)

{

((Table<T>) _source).DeleteOnSubmit(entity);

}

}

}

Then the InMemoryDataSource does a similar thing but with a Dictionary of TrackedObjects – each of which can accept changes. (This is what I want for my Unit Tests.)

using System;

using System.Collections;

using System.Collections.Generic;

using System.Linq;

using System.Linq.Expressions;

namespace Library.Linq

{

public class InMemoryDataSource<T> : DataSource<T>, ITrackingContainer where T : class, new()

{

private readonly IDictionary<T, TrackedObject<T>> _trackedObjects

= new Dictionary<T, TrackedObject<T>>();

public InMemoryDataSource() : this(new List<T>())

{

}

public InMemoryDataSource(List<T> source)

{

if (source == null)

throw new ArgumentNullException("source");

source.ForEach(Track);

_source = _trackedObjects.Keys.AsQueryable();

}

public override Type ElementType

{

get { return _source.ElementType; }

}

public override Expression Expression

{

get { return _source.Expression; }

}

public override IQueryProvider Provider

{

get { return _source.Provider; }

}

IDictionary ITrackingContainer.Data

{

get { return _trackedObjects; }

}

public override IEnumerator<T> GetEnumerator()

{

return _source.GetEnumerator();

}

public override void InsertOnSubmit(T entity)

{

Track(entity);

_trackedObjects[entity].ChangeState(TrackedState.Added);

}

public override void DeleteOnSubmit(T entity)

{

Track(entity);

_trackedObjects[entity].ChangeState(TrackedState.Deleted);

}

private void Track(T entity)

{

if (!_trackedObjects.ContainsKey(entity))

_trackedObjects.Add(entity, new TrackedObject<T>(entity));

}

}

public interface ITrackingContainer

{

IDictionary Data { get; }

}

}

The TrackedObject (listed below) could definitely be improved – currently it just does some basic change tracking using the enum TrackedState:

namespace Library.Linq

{

internal class TrackedObject<T> : ITrackedObject where T : class, new()

{

private readonly T _inner;

private TrackedState _state;

public TrackedObject(T trackedObject)

{

_inner = trackedObject;

}

public T Inner

{

get { return _inner; }

}

public TrackedState State

{

get { return _state; }

}

object ITrackedObject.Inner

{

get { return _inner; }

}

public void ChangeState(TrackedState state)

{

_state = state;

}

public void AcceptChanges()

{

_state = TrackedState.Undefined;

}

}

internal enum TrackedState

{

Undefined,

Added,

Modified,

Deleted

}

}

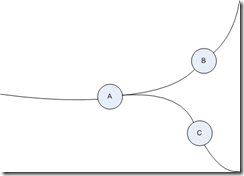

Here’s the class diagram for the DataSource side of things:

I was going to divide this into 2 posts but couldn’t really see the point as we’re nearly there (and it get’s much better from here on), so on we go.

The Unit Of Work

First the classes derived from IUnitOfWork. We’re going to need one for the real database and one for the tests. The DatabaseUnitOfWork also has a dependency on the LINQ DataContext – but the IoC container will take care of that.

using System;

using System.Data.Linq;

namespace Library.Linq

{

public class DatabaseUnitOfWork : IUnitOfWork

{

private readonly DataContext _dataContext;

public DatabaseUnitOfWork(DataContext dataContext)

{

if (dataContext == null)

throw new ArgumentNullException("dataContext");

_dataContext = dataContext;

}

public IDataSource<T> GetDataSource<T>() where T : class, new()

{

return new DatabaseDataSource<T>(_dataContext);

}

public void SubmitChanges()

{

_dataContext.SubmitChanges();

}

public void SubmitChanges(ConflictMode conflictMode)

{

_dataContext.SubmitChanges(conflictMode);

}

public void Dispose()

{

_dataContext.Dispose();

}

}

}

The InMemoryUnitOfWork is more interesting as it manages each InMemoryDataSource<T> (like the DataContext manages each Table<T>):

using System;

using System.Collections.Generic;

using System.Data.Linq;

using System.Linq;

namespace Library.Linq

{

public class InMemoryUnitOfWork : IUnitOfWork

{

private readonly IDictionary<Type, IQueryable> _dataSources

= new Dictionary<Type, IQueryable>();

private readonly object _lock = new object();

public IDataSource<T> GetDataSource<T>() where T : class, new()

{

lock (_lock)

{

if (!_dataSources.ContainsKey(typeof (T)))

_dataSources.Add(typeof (T), new InMemoryDataSource<T>());

return _dataSources[typeof (T)] as InMemoryDataSource<T>;

}

}

public void SubmitChanges()

{

SubmitChanges(ConflictMode.FailOnFirstConflict);

}

public void SubmitChanges(ConflictMode conflictMode)

{

foreach (var pair in _dataSources)

{

var trackingContainer = (ITrackingContainer) pair.Value;

foreach (ITrackedObject trackedObject in trackingContainer.Data.Values)

trackedObject.AcceptChanges();

}

}

public void Dispose()

{

}

}

}

The Repository

Finally the Repository<T> implementation which coordinates the IUnitOfWork and IDataSource<T>. Note that we’re introducing the IoC (or more correctly ¨DI¨) container here. This example uses Unity but any DI container will do exactly the same job. Unity is great, simple, quick and perfectly good for the job. (I’m also getting into StructureMap right now which looks great – version 2.5 is very modern (!) and it gets you away from the traditionally over-used XML config). In Windsor and StructureMap you can specify that your object’s lifetime follows the web request (StructureMap even has a hybrid mode that gives you a web request lifetime if there is an HttpContext and a ThreadLocal if not) – with Unity I had to jump through a few hoops. In the meantime (please contain your excitement) here’s the Repository listing…

using System;

using System.Collections;

using System.Collections.Generic;

using System.Data.Linq;

using System.Linq;

using System.Linq.Expressions;

using Library.IoC;

using Microsoft.Practices.Unity;

namespace Library.Linq

{

public class Repository<T> : IRepository<T> where T : class, new()

{

private readonly IDataSource<T> _source;

private readonly IUnitOfWork _unitOfWork;

public Repository()

{

_unitOfWork = ((IContainerAccessor) Context.Current).Container.Resolve<IUnitOfWork>();

_source = _unitOfWork.GetDataSource<T>();

}

[InjectionConstructor]

public Repository(IUnitOfWork unitOfWork)

{

_unitOfWork = unitOfWork;

_source = _unitOfWork.GetDataSource<T>();

}

public IDataSource<T> Source

{

get { return _source; }

}

IEnumerator<T> IEnumerable<T>.GetEnumerator()

{

return _source.GetEnumerator();

}

public IEnumerator GetEnumerator()

{

return _source.GetEnumerator();

}

public Expression Expression

{

get { return _source.Expression; }

}

public Type ElementType

{

get { return _source.ElementType; }

}

public IQueryProvider Provider

{

get { return _source.Provider; }

}

IDataSource<T1> IUnitOfWork.GetDataSource<T1>()

{

return (IDataSource<T1>) _source;

}

public virtual void SubmitChanges()

{

_unitOfWork.SubmitChanges();

}

public virtual void SubmitChanges(ConflictMode conflictMode)

{

_unitOfWork.SubmitChanges(conflictMode);

}

public T GetById(object id)

{

return _source.GetById(id);

}

public void InsertOnSubmit(T entity)

{

_source.InsertOnSubmit(entity);

}

public void DeleteOnSubmit(T entity)

{

_source.DeleteOnSubmit(entity);

}

public void Dispose()

{

_unitOfWork.Dispose();

}

}

}

The constructor finds the relevant Container and gets it to resolve the current Unit of Work. It does this because you don’t want to. At point of use – you really don’t care about IoC, UoW, or any other TLAs.

Dependency Injection

So how do we use all this? Well, there’s no more to it than I alluded to at the beginning of the post. Every time you construct a new Repository, the IoC container will connect it to the relevant Unit Of Work and DataSource for you. Automatically. You can just use it and all will be just fine. In your app with the real DB or in your tests with an in-memory store.

Here’s how I configured Unity to set it up for me (obviously in your test assemblies you would register the in-memory equivalents). This is in Global.asax:

private static readonly IUnityContainer _container = new UnityContainer();

protected void Application_Start(object sender, EventArgs e)

{

_container

.RegisterType<DataContext, MyDataContext>()

.Configure<InjectedMembers>()

.ConfigureInjectionFor<MyDataContext>(

new InjectionConstructor(ConfigurationManager.ConnectionStrings["MyConnStr"].ConnectionString));

((IContainerAccessor)Library.IoC.Context.Current).Container = _container;

}

protected void Application_BeginRequest(object sender, EventArgs e)

{

IUnityContainer childContainer = _container.CreateChildContainer();

childContainer

.RegisterType<IUnitOfWork, DatabaseUnitOfWork>(new ContainerControlledLifetimeManager());

((IContainerAccessor) Library.IoC.Context.Current).Container = childContainer;

}

protected void Application_EndRequest(object sender, EventArgs e)

{

IUnityContainer container = ((IContainerAccessor) Library.IoC.Context.Current).Container;

if (container != null)

container.Dispose();

}

The interesting things to note here are related to the life-time management of the Unit of Work. The parent Unity Container has application scope and will be used to resolve anything that the child container (which has request scope) cannot. This involves resolving the DataContext and injecting the DB connection string into its constructor.

A new child container is created for each incoming HTTP request and is instructed to create a new DataBaseUnitOfWork for the lifetime of the child container (which happens to equal the lifetime of the request because the child container is disposed at the end of the request). When a container is disposed, any objects to which it holds a reference will also get disposed, so the UnitOfWork, which implements IDisposable, gets disposed and any outstanding change tracking is lost. That’s exactly what we want – it’s up to you to decide when to call SubmitChanges() on the Repository and you can do that as often and whenever you want – each one encapsulating an atomic transaction with the DB. You can even call SubmitChanges() like this:

new Repository<User>().SubmitChanges();

and any outstanding changes will get flushed inside a new transaction. The point is that it doesn’t matter how many times you “new up” a Repository, you’re still working with the same underlying DataContext.

Creating child Unity Containers doesn’t seem to be expensive – and it doesn’t seem to matter how many of them you create. Let me know if you find anything bad about this though! The Context implementation is not listed here (but see update below), but it’s reasonably trivial and is in the downloadable project (if I can find somewhere to host it!).

I’m really interested in your comments. I know we can make this better. At point of use, it seems to me, it’s nice and simple. The implementation, though, can always be improved.

Update: The Context class is simply a static class with one property (called Current) whose backing field is marked as ThreadStatic. At the start of each web request (in Global.asax) a reference to the child container is stored in this field which remains local to the thread that the request is running on – each new request will get a thread from the thread pool and have it’s obsolete reference replaced with one to the newly created child Container specific to the incoming request.

Having done Biology at University rather than Computer Science, I regret having spent most of my career not really understanding why functional programming is so important. I knew it was the oldest paradigm around, but assumed that it was just for mathematicians and scientists – business software needed something far better: imperative programming and object-orientation. How naive was I?

Having done Biology at University rather than Computer Science, I regret having spent most of my career not really understanding why functional programming is so important. I knew it was the oldest paradigm around, but assumed that it was just for mathematicians and scientists – business software needed something far better: imperative programming and object-orientation. How naive was I?We’ll leave a discussion about why functional programming is so good for a future post. In the meantime, I can recommend both these books. “Foundations” is more readable, and “Expert” more thorough (neither assume any previous F# knowledge).

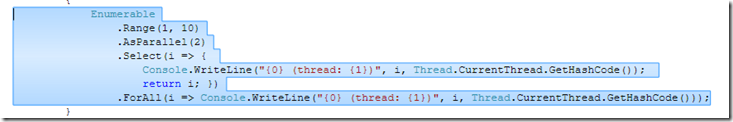

There was lots of talk at Tech-Ed last week about concurrency and parallel programming. It’s becoming a real issue because we’re in the middle of a shift in the way

There was lots of talk at Tech-Ed last week about concurrency and parallel programming. It’s becoming a real issue because we’re in the middle of a shift in the way